I have a love-hate relationship with artsy movies. I'll go see the epics at the theater, but I'll wait until the artsy, thought-provoking flicks hit Netflix to enjoy at home. There are a few reasons for this:

- These types of movies are a risk. There's no guarantee that I'm going to enjoy them.

- When I go to the movies, I usually bring my husband, and he doesn't enjoy this type of thing.

- I like the solitude that home provides. Somehow, a theater just doesn't seem like the appropriate atmosphere for such thought-provoking fare.

"Her" - directed by Spike Jonze - is one of these films.

Warning! Here be spoilers!

The bare-bones synopsis of the movie: A writer, Theodore Twombly, is lonely and depressed, coping with the breakup of his marriage. After seeing an ad, he invests in OS1, an artificially intelligent operating system that names herself Samantha. He falls in love with this AI, experiences ups and downs (similar to those of an average human relationship), and eventually finds the courage to finalize his divorce and allow himself to heal. In the end, Samantha and all the other AIs "leave," forcing Twombly (and, presumably, others) to reconnect with their own humanity and those around them.

- Theodore Twombly, in self-imposed isolation

This film offers many themes, but what struck me most in the beginning was Theodore Twombly's loneliness and isolation, despite his living in an apparently large city. Many scenes in the film are designed to convey this sense of isolation. I identify with this feeling deeply. I'm sure that just about everyone, at one point or another, has found themselves surrounded by people - in a college class, at a coffee shop, or walking down the street in their own neighborhood - yet at the same time felt helpless and alone. I'll get back to the theme of loneliness in a bit. What I really want to focus on is the definition of "life."

The big Buddhist theme in this film (in my humble opinion) is the AI itself - specifically, what qualifies as sentience? Should a machine or software program be treated with the same respect as a human (or any other sentient being)? Buddhism, unlike other religions, tends to have a non-speciesist mindset: it sees life as life, regardless of race or species, and as a result, all life has value. There's nothing special about humans, but we are all special as forms of life. In Buddhist thought, there is the concept of Anatta, or "No Self," which views the self as impermanent - we have no "identity" that is set in stone. This has interesting philosophical implications when it comes to intelligence, which I'll try valiantly to address in this article.

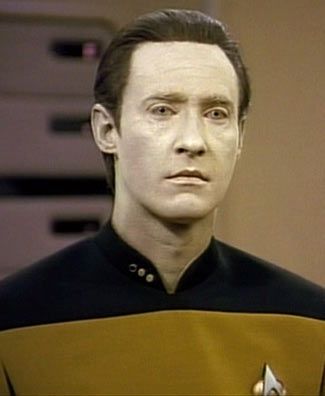

- Lieutenant Commander Data

Although not specifically Buddhist-related, "Her" also raises the question: what is human, really? It isn't the first feature film to ask this question; the movie Bicentennial Man also explored this theme (among many others). It doesn't take a rocket scientist to figure out that I'm a big fan of sci-fi. The theme of "defining humanity" is prevalent in Star Trek (which is my primary sci-fi fandom). Characters like Spock, a half-human, half-Vulcan who struggles with his humanity, and Worf, a Klingon raised by humans, explore how the human dynamic affects their behavior and thought processes. The most applicable comparison to Samantha, however, is Data, a Soong-type android. Data is hardware in addition to software, meaning he has a body in which his consciousness resides, whereas Samantha is software, residing in the digital realm. As recent research in Japan suggests, androids like Data may soon jump from the realm of science fiction into science fact - and we may be living with them sooner than we think.

The incredibly riveting property trial episode of Star Trek: The Next Generation, The Measure of a Man, explored the conflict between those who believe androids are machines and therefore property, and those who believe that androids could be considered conscious and sentient, and therefore another life form (available on Netflix; if you don't mind Spanish subtitles, the episode can be seen here, or with ads on CBS.com). Commander Riker's initial examination of Data was the most gut-wrenching moment of the whole series - at least, in my opinion. I feel that part of my strong emotional reaction comes from the fact that Data looks so human that it may be impossible or incredibly difficult for my brain to adapt to the reality that he's not (our brains tend to value visual input to an astounding degree). Also, this episode aired in Season 2, giving viewers plenty of time to emotionally attach themselves to the character.

The Measure of a Man isn't an anomaly - Star Trek often pushed the envelope on social and political issues from the beginning - but this episode is my hands-down favorite for being so thought-provoking.

- Robot and Frank

Another interesting theme is how humans interact with machines in the digital era. An article at Technology Review explores whether an AI companion would make us more human, in the sense that we would have another life form to interact with on the same level as humanity (or close enough). A relationship with an AI - romantic or not - could keep social skills fresh, help us retain memory, and give us a nonjudgmental outlet for our thoughts and emotions. The movie Robot and Frank (a sci-fi film where a retiree with memory problems is given a robot carer) explores this theme. Caution: It's a tear-jerker. But it's funny as well - I highly recommend it. Toyota has been developing assistive robots similar in appearance to Frank's robot to help dementia patients remain in their homes longer. In the future, we could wind up deriving as much joy from a relationship with our digital companions as we do from our pets today. Already, psychiatric patients are interacting with "relational agents" - social robots or screen-based characters that build trust and create therapeutic partnerships with patients, which aid in psychological therapy. Then there's PARO, the AI baby seal, which is being used in nursing homes to soothe dementia patients. Could an app that treats loneliness be just around the corner?

So, as a Buddhist, how would I approach this issue of a possibly-sentient AI, and how would I counsel others to treat it? Put simply, I'd make the safe assumption and treat it as a sentient being deserving of compassion. A lovely article at the Institute for Ethics & Emerging Technologies covers this topic well:

> But just because Buddhism holds a high regard for all organic life, why would it necessarily accept artificial intelligence in the same way? The simple answer is that, from a Buddhist view of the mind and consciousness, all intelligence is artificial.

That's where "No Self" comes in. If we are ever-changing, then we have no true "self" to which we can attach our intelligence. Buddhist literature (teachings attributed to the historical Buddha) posits that living things are composed of five skandhas, or "heaps": our body, our feelings, our perceptions, our mental formations, and our consciousness [1]. Our consciousness, intelligence, feelings, and perceptions are part of our body... and yet not. This fits surprisingly well with the scientific perspective, where the body can be described as a highly intricate agglomeration of ever-changing chemical reactions. This is quickly developing into a philosophical discussion rather than a practical one - which, as you may recall, is not my area of expertise. Even learned scholars have different methods of approaching "No Self," however, so I'm not going to hesitate to offer up my own interpretation.

Back to the point I want to make...

Life on our planet has astounding variety - is there not room for Artificial Intelligence in our understanding of the nature of life? As Captain Picard discovered in The Measure of a Man, the answer to this question isn't quite clear. Much depends on how sentience, consciousness, and self-awareness are defined. Since definitions of these terms differ wildly, it can be safely assumed that a consensus won't be reached anytime soon - or ever. Regardless, the questions posed in this article are questions that the human race is going to have to answer, or at least address. Robotics and computer science are advancing at a rapid pace, often collaborating with neuroscience in an effort to understand both Natural and Artificial Intelligence. Eventually, AI organisms will share our reality - it is only a matter of time.
